Listening to articles rather than reading them, because we are driving, have our hands full in the kitchen or are in the middle of a workout: the idea won over the whole industry when smart speakers took off, but the reality was, let's be frank, a real disappointment. Metallic voices, mispronunciations, a monotone delivery: our ears suffered a great deal. But that was 2017. Rapid advances in speech synthesis have since made the experience acceptable and even… popular.

In early March 2024, the French daily Le Monde launched audio versions of all its articles in its mobile apps, a feature reserved for subscribers. I met Lomig Coulon, Lead Product Manager, and Julie Lelièvre, Deputy Head of Audience, who told The Audiencers how the project came about, how it was built and what the first results look like.

Hello Lomig, Julie. To start with, could you tell us when and how this audio project made its way onto your roadmaps?

Julie and Lomig: Hello, The Audiencers!

Of course. Over the past few years we had seen audio usage grow in France through podcasts, and in the United States and the United Kingdom some media outlets were starting to have their articles read by actors. We were keeping an eye on it and noting the trend… without really working on the subject.

It was in 2022, when we began redesigning our La Matinale mobile app, that the project took shape. The app's mission is to save readers time through a smaller selection of news. Audio meets that same need to save time, so we decided to go for it!

In addition, in our cancellation forms, many subscribers told us they loved Le Monde but did not have time to read, and felt they were not getting the most out of their subscription.

So our position became:

“If they don't have time to read us, maybe they'll have time to listen to us!”

Let's talk objectives. What is this feature meant to achieve?

We identified two:

- First, subscriber retention: encouraging usage and content consumption;

- And also acquisition, by promoting this subscriber-only service.

In 2022, the project's scope was the La Matinale app only, correct?

Yes, that's right. La Matinale is a finite format, with engaged users and an already established daily habit. Because it is a finite selection, building an audio playlist comes naturally. Readers pick from the 20 articles and so build their own selection to listen to on their morning commute.

La Matinale also has a smaller audience than our main Le Monde app, which makes it better suited to experimentation and innovation.

Although we took an iterative approach and initially limited the feature to La Matinale, from the outset we designed the player and the mechanism that converts article text to audio to be reusable across all our articles and in the main Le Monde app.

At launch, did you distinguish between free and paid articles, or between subscribers and non-subscribers?

We thought a lot about this, weighing two goals: strengthening the value of our subscription by reserving the feature for it, and introducing as many people as possible to this new way of getting the news.

That is why, when we launched the feature in La Matinale, audio was available to everyone, on free and paid articles alike. Non-subscribers could therefore listen to the few free articles included each day in the La Matinale edition.

In the end, when we rolled the service out to the main Le Monde app, we reserved it for subscribers only. We then realigned La Matinale with that same principle.

How did you choose your text-to-speech technology?

We quickly ruled out human narration: we publish more than 100 articles a day and wanted the audio version available as soon as an article is published, so we do not have the time needed to have them read by actors, record the voices, and so on. In that context, a synthetic voice was the obvious solution.

We looked at several solutions: Murf, Odia, Remixd, Voxygen, Google, ETX Studio, Read Speaker and Microsoft Azure Cognitive Service.

We ended up choosing Microsoft's solution, with voice quality as the primary criterion. At the time, they had the most convincing French voice.

We continue to follow progress in speech synthesis closely, at Microsoft and at other vendors, because things move very fast in generative AI!

Beyond the voice, there is the question of playback. Which audio player did you choose?

This project gave us the opportunity to build our own native player for our mobile apps. Previously we used an embedded web player, which was disappointing in several respects: playback did not continue when you navigated away, and there were few features for listening comfort…

Today, our readers can minimize or expand the audio player, retrieve the link to the article being read, change the playback speed and, above all, keep listening while navigating or when the phone is locked.

We were surprised by how much the playback speed control is used! Far more than we expected…

In practical terms, what is the process for converting written articles to audio?

Upstream, we did two things:

- We created our own custom synthetic voices with actors. We now have 4 “Le Monde” custom voices. We chose to alternate male and female voices to hold the listener's attention from paragraph to paragraph;

- Our official composer, Amandine Robillard, created several sound beds to add a musical layer to our text-to-speech, which we use to introduce our audio files.

Then, day to day:

- Le Monde sends Microsoft the text of each article along with a number of settings. For example, we specify which portions should and should not be read (such as subheadings or anything in square brackets), the number of voices to use…

- Microsoft sends us back an audio file with our rules applied;

- Our in-house tool adds an introduction, “Vous écoutez un article du Monde” (“You are listening to an article from Le Monde”), and the sound bed;

- The file then arrives in Sirius, our in-house CMS, and is published as is.
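
The first daily step, sending text plus reading rules, can be pictured as a small pre-processing stage that turns an article into SSML (the W3C speech markup that synthesis services such as Azure accept). The sketch below is only an illustration under stated assumptions: the article is already split into typed blocks, the voice names are placeholders, and `build_ssml` with its per-paragraph alternation is a hypothetical helper, not Le Monde's actual tooling.

```python
import re
from xml.sax.saxutils import escape

# Placeholder voice names: the real custom voice identifiers are not public.
VOICES = ["LeMondeVoiceA", "LeMondeVoiceB"]

# Anything between square brackets is treated as "do not read".
BRACKETED = re.compile(r"\[[^\]]*\]")


def build_ssml(blocks, voices=VOICES, lang="fr-FR"):
    """Build an SSML document from (kind, text) blocks.

    Assumed block kinds: "paragraph" (read aloud) and "intertitle" (skipped).
    Voices alternate from one spoken paragraph to the next.
    """
    parts = []
    spoken = 0
    for kind, text in blocks:
        if kind != "paragraph":
            continue  # subheadings and other non-body blocks are not read
        text = BRACKETED.sub("", text)   # drop bracketed portions
        text = " ".join(text.split())    # collapse leftover whitespace
        if not text:
            continue
        voice = voices[spoken % len(voices)]
        parts.append(f'<voice name="{voice}">{escape(text)}</voice>')
        spoken += 1
    body = "".join(parts)
    return (
        '<speak version="1.0" '
        'xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{lang}">{body}</speak>'
    )


if __name__ == "__main__":
    article = [
        ("paragraph", "Bonjour [note interne] le monde"),
        ("intertitle", "Un intertitre"),
        ("paragraph", "Recherche & développement"),
    ]
    print(build_ssml(article))
```

Filtering on the publisher's side before synthesis keeps the reading rules in one place, whichever vendor ends up producing the audio.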

At the beginning, of course, we listened to the files one by one as they came in, mainly to correct the pronunciation of proper nouns, Roman numerals, hyphenated words… That is no longer the case today: the audio version goes straight into production. We only make corrections if readers report errors.

Which pronunciation cases were the hardest to handle?

We built our own dictionary. A few examples of special cases:

- The final “t” in “jet d’eau” (water fountain) vs “jets privés” (private jets)

- Jean the first name vs jean, the trousers

- Dix and six (ten and six: whether or not to pronounce the final “s” sound)

One typical case that is handled badly is the capital letter X: for Twitter's new name, the voice says “dix” (ten). We knew there would be a few errors of this kind: we accept a margin of imperfection…
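
A pronunciation dictionary of this kind can be thought of as a list of context-sensitive rewrite rules applied to the text before synthesis. The sketch below is a hedged illustration only: the two rules, the phonetic respellings and the `apply_lexicon` helper are invented for the example, and the interview does not describe how Le Monde's dictionary is actually built. It uses the standard SSML `<sub alias="…">` element, which tells the voice to read the alias instead of the written form.

```python
import re

# Hypothetical rules: (pattern, replacement). The alias spellings are
# illustrative guesses, not entries from Le Monde's dictionary.
RULES = [
    # "X" read as a letter only in contexts that suggest the social network,
    # so that Roman numerals such as "Louis X" are left untouched.
    (re.compile(r"\b(réseau social|sur) X\b"), r'\1 <sub alias="iks">X</sub>'),
    # Lowercase "jean" is the garment; capitalised "Jean" is the first name.
    (re.compile(r"\bjean(s?)\b"), r'<sub alias="djine">jean\1</sub>'),
]


def apply_lexicon(text, rules=RULES):
    """Apply each rewrite rule in order and return the annotated text."""
    for pattern, replacement in rules:
        text = pattern.sub(replacement, text)
    return text


if __name__ == "__main__":
    print(apply_lexicon("Jean porte un jean et publie sur X."))
    print(apply_lexicon("Louis X régna deux ans."))
```

In a real pipeline the text would be XML-escaped before the rules run, so that the inserted `<sub>` tags are the only markup in the result. Rules keyed on context will always miss some cases, which matches the margin of imperfection described above.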

French voices will keep improving, but they are already not bad at all. A few readers were fooled and thought they were hearing human voices!

We should point out, though, that this was not our intention: our apps state clearly that it is a synthetic voice.

What were your first results?

On La Matinale, reader engagement rose sharply, but since we were measuring it right after the redesign, it is hard to know whether the feature drives engagement or engagement drives use of the feature…

In spring 2023, you decided to launch the service in the main Le Monde app, but for a very small share of the audience…

Indeed, at that point we embarked on a huge A/B test that ran for 1 year, and we tracked its rollout and results with the product analytics platform Amplitude.

Specifically, we took 25% of the main app's audience and split it into 8 cohorts:

- 4 control cohorts without access to audio

- 4 test cohorts with access to audio

All 8 are composed in the same way, so that any 2 cohorts should follow the same pattern. To confirm that the test was reliable, we waited until the 4 test cohorts showed the same variations.
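
One common way to obtain cohorts that are "composed in the same way" is to assign users deterministically from a hash of their identifier. The sketch below illustrates that idea with the proportions quoted above (25% of the audience, 8 cohorts, half control and half test). It is an assumption-laden example: the `assign_cohort` function, the salt and the bucket sizes are hypothetical, and the interview does not say how Le Monde or Amplitude actually performed the split.

```python
import hashlib

TOTAL_BUCKETS = 3200      # arbitrary bucket space
ENROLLED_BUCKETS = 800    # 800 / 3200 = 25% of the audience
N_COHORTS = 8             # 4 control + 4 test


def assign_cohort(user_id, salt="audio-ab-test"):
    """Return (cohort_index, group) for enrolled users, or None otherwise.

    The same user_id and salt always give the same answer, so a user never
    switches cohorts between sessions.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % TOTAL_BUCKETS
    if bucket >= ENROLLED_BUCKETS:
        return None  # the remaining 75% are outside the experiment
    cohort = bucket % N_COHORTS            # 100 buckets per cohort
    group = "test" if cohort % 2 else "control"
    return cohort, group


if __name__ == "__main__":
    for uid in ("user-1", "user-2", "user-3"):
        print(uid, assign_cohort(uid))
```

With several cohorts per arm, the control cohorts can be compared with one another first: if they diverge, the split or the tracking is suspect before the feature is even considered.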

> See the webinar by Le Monde & Amplitude presenting the outline of this large-scale A/B test

From a functional standpoint, where is the feature shown to users?

We surfaced the feature on the article page, in the bottom bar, whereas in La Matinale readers can access it as early as the news selection screen, one page earlier.

But we realized that in the main app the feature was struggling to take off. We figured it probably lacked exposure: after tapping an article's headline, users are in a reading mindset, not a listening mindset.

We ran a few user tests and found that our readers had not even seen the audio option! Our main hypothesis then became that we needed to give the feature more exposure…

Of course, we also had a communication problem: since we were in the middle of an A/B test, we could not communicate at scale, only send a few in-app pushes.

So how did you go about showing it to more users?

We sent another in-app push to all the beta testers, with an A/B/C/D test on the copy:

Above: the 4 versions of our pushes, sent with Batch

And there we saw a good boost in usage, which held steady over time.

We found that the success of this feature depends (also) on its exposure!

So we decided to display the audio play button directly on the feed pages (home pages, section home pages…), and at that point usage exploded. That confirmed there was real appetite for the feature: it just needed to be made more visible!

In March 2024, you launched the feature to 100% of your main app's audience…

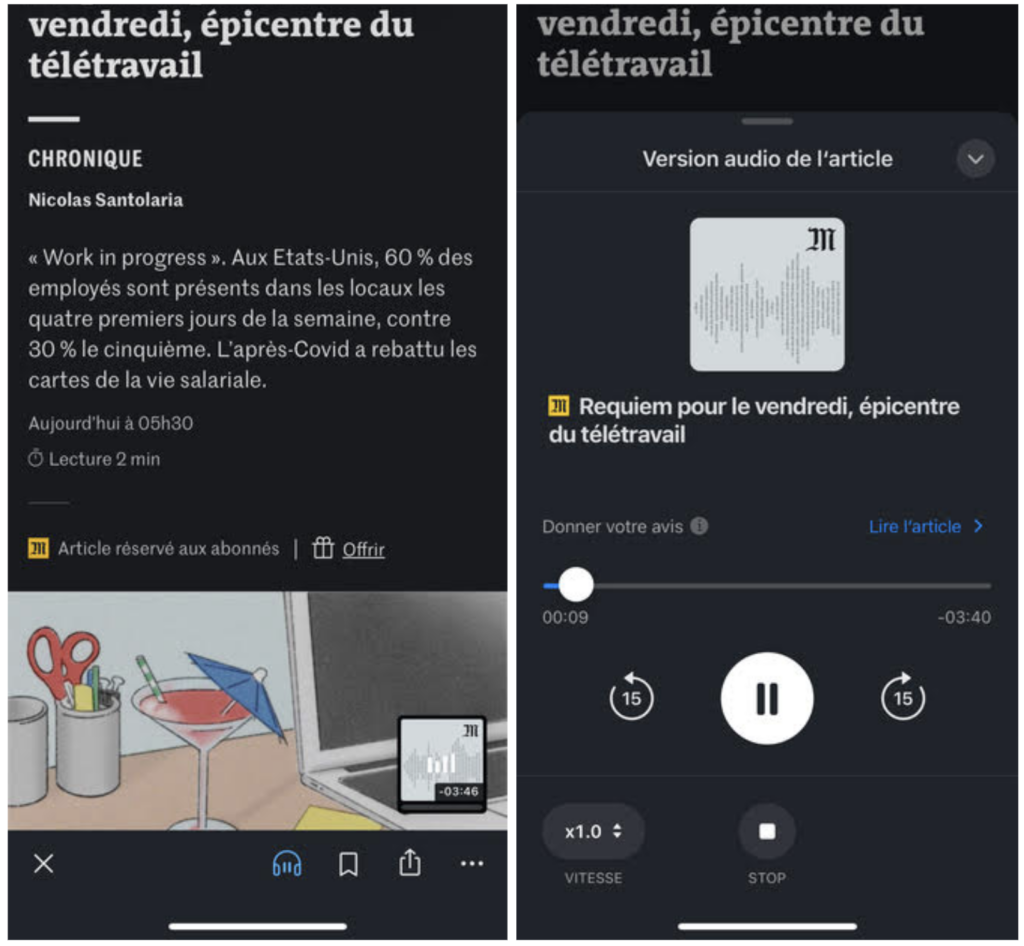

Yes, that's right. Below is what our subscribers see, on feed pages (left) and on the article page (right):

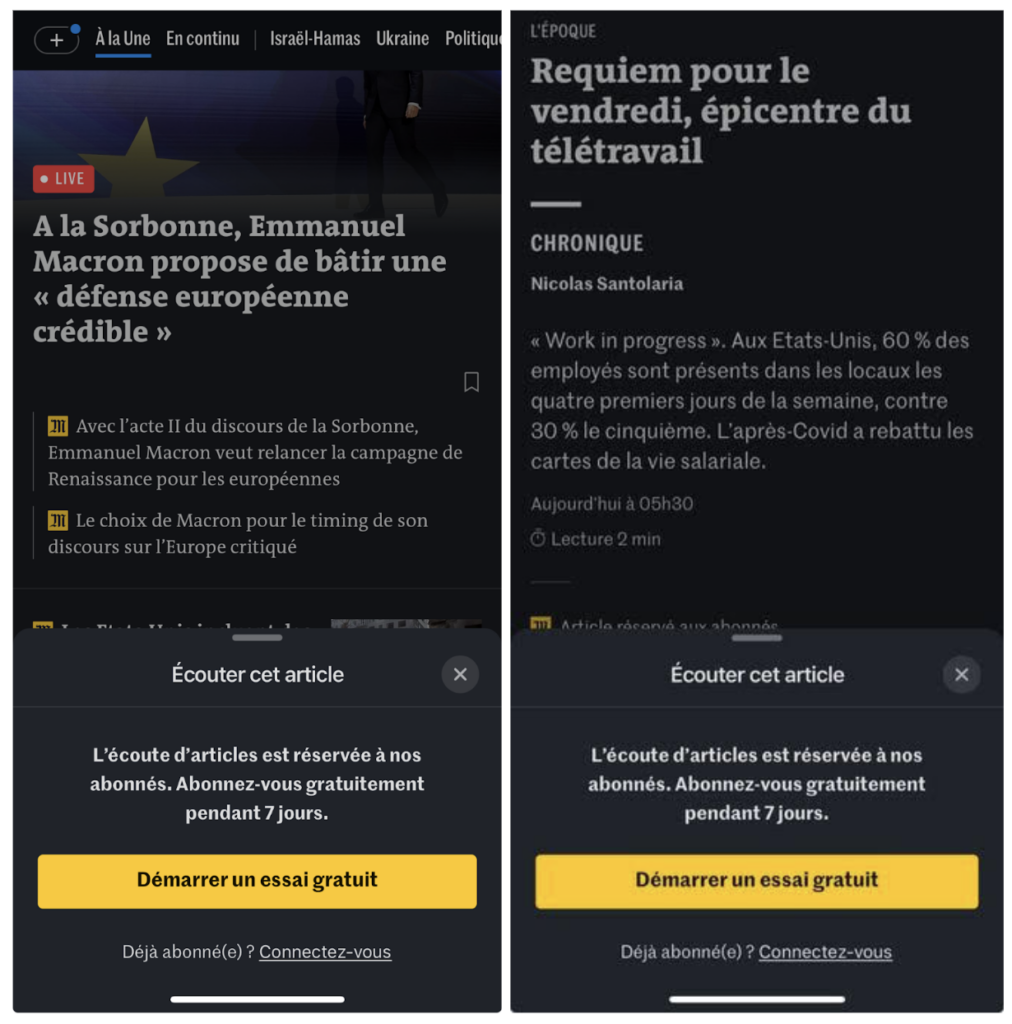

And next, two screenshots of the subscription prompt shown when listening is started from a feed page (left) and from an article page (right):

What are the first usage statistics?

Among subscribers who use the app, 30% have used the feature at least once a month since its launch.

We find that readers who use the feature are 1.7 times more engaged than other readers (in number of articles read).

Have you already seen any effect on subscriber retention?

Not really, for now. Those who listen do not necessarily stay subscribed longer. However, we do see an effect on engagement: those who listen read more articles!

Can we take the reasoning as far as saying that if more articles are read, the subscription is more secure? It is hard to say at this stage.

How do you study how your readers/listeners use the feature?

We obviously have a full tagging plan for the quantitative side (where the feature is used, what share of the audio is completed…?).

On the qualitative side, we set up a feedback form in the app and another in the player.

We are looking for two types of feedback:

- Is there a problem with the audio quality: mispronounced numbers or proper nouns, a voice that sounds too robotic…?

- And more general feedback: is it easy to use, what do you think of the sound design…?

What are you learning?

First, a number: 80% of users say they are “satisfied” or “very satisfied” with the feature.

Then, of course, most of the verbatim comments are a pleasure to read…

“It's really interesting to be able to listen to the long articles, it's a great idea!”

“I listen to a lot of podcasts and I really appreciate being able to listen to my Le Monde articles”

“Bravo! I have rarely seen such a good integration of a synthetic voice!”

On usage

“Very good initiative, it lets me listen to articles while walking.”

“Being able to listen to articles in the shower or in the car”

Accessibility

“Hello. Well done on this feature, it's great. I'm blind and I love using it while working out.”

“Thank you on behalf of dyslexic people, who can finally listen to you.”

Subscription

“I think it's great, and this feature is the reason I subscribed!”

On the other hand, we get little information about where people listen. Are we listened to more in the car or while doing the dishes?

Are you trying to demonstrate the return on investment of this development?

This audio version of articles is above all about a commitment to innovation, and an experiment. The aim is to develop a new habit over the medium to long term, so we do not expect a return on investment in year 1.

It should be noted that this project was carried out thanks to the Google News Initiative, which allowed us to fund a large part of the initial development. For now we are not planning further development, but we do have operating costs (Azure license and generating the audio with our Le Monde voices).

On the revenue side, since it is a subscriber-only feature, we can of course calculate the conversion rate on the paywall and track how much the feature contributes to subscriber acquisition and retention. Finally, it is technically possible to monetize this audio through advertising, but that is not yet the case. Our ad sales team is, however, thinking about developing this option in the future.