"Making decisions is easy, what’s hard is making the right decisions."

Netflix is constantly evolving its product offerings and quality of experience to improve the value it offers subscribers.

Just look at Netflix in 2010 compared to today:

In 2010, Netflix had a static user interface, limited navigation options and a layout inspired by video rental stores.

Fast forward to 2022 and Netflix offers an immersive user interface with a video-forward experience, richer navigation options and beautiful box art that is personalized to each user.

But this transition didn’t happen overnight. It’s required countless decisions. And whilst making decisions is easy, what’s hard is making the right decisions.

How can Netflix be sure that their decisions will lead to a better product experience for existing members and support the acquisition of new ones?

The answer – A/B testing.

As the Netflix Technology blog put it, whilst decisions made in a team are limited to the viewpoints and perspectives of those involved, A/B testing is a form of experimentation that gives subscribers the opportunity to vote subconsciously with their actions on how to evolve the Netflix experience.

More specifically, A/B testing allows teams to establish causality. That lets them make changes to the product more confidently, knowing that subscribers have voted for those changes with their actions.

It also plays a key role in implementing an iterative learning process, alternating between deduction and induction to continuously optimize performance.

George Box’s diagram shows this process in two directions. Deduction goes from an idea or theory to a hypothesis, and then to actual observations or data that can be used to test that hypothesis. Induction goes the other way, generalizing from specific observations or data to new hypotheses or ideas.

Running these experiments

The hypothesis: If we make change X, it will improve the member experience in a way that makes metric Y improve.

Take a random sample of subscribers (the reasoning behind ‘random’ is explained below) and split it evenly into two groups: the control and the treatment.

Each group receives a different experience based on the defined hypothesis: the control keeps the current product, while the treatment gets change X.

Wait for the test to run its course before comparing the two groups based on a variety of predefined metrics.
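The sampling and splitting steps above can be sketched in a few lines of Python. This is only an illustration, not Netflix's actual allocation system: the `assign_groups` function, the integer subscriber ids and the sample size are all assumptions made for the example.

```python
import random

def assign_groups(subscriber_ids, sample_size, seed=0):
    """Draw a random sample and split it evenly into control and treatment."""
    rng = random.Random(seed)  # fixed seed only so the sketch is repeatable
    sample = rng.sample(subscriber_ids, sample_size)  # random subset, no repeats
    half = sample_size // 2
    return {"control": sample[:half], "treatment": sample[half:]}

# Hypothetical subscriber ids, purely for illustration.
subscribers = list(range(10_000))
groups = assign_groups(subscribers, sample_size=1_000)
print(len(groups["control"]), len(groups["treatment"]))
```

Because the sample is already in random order, slicing it in half gives two randomly assigned groups of equal size with no subscriber in both.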

Think carefully about your chosen metrics and what they convey

Suppose the test flips a title's box art upside down. A high click-through rate may well imply that the flipped image is more inviting and entices more users to watch the title. However, it could also mean that the text is harder to read, so subscribers click on the title just to read it more easily.

In this case, it might be valuable to also measure the percentage of subscribers who then leave that content after clicking vs those who go ahead and watch it.
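To make that concrete, here is a small sketch that reports click-through rate alongside what happens after the click. The `click_metrics` helper and all of the counts are made up for illustration; they are not Netflix data or Netflix's metric definitions.

```python
def click_metrics(impressions, clicks, plays_after_click):
    """Return click-through rate and how clicks split between plays and exits."""
    ctr = clicks / impressions
    # Share of clicks that turned into a play; guard against zero clicks.
    play_rate = plays_after_click / clicks if clicks else 0.0
    exit_rate = 1 - play_rate if clicks else 0.0
    return {
        "click_through_rate": ctr,
        "play_after_click": play_rate,
        "exit_after_click": exit_rate,
    }

# Illustrative counts only.
control = click_metrics(impressions=1_000, clicks=100, plays_after_click=60)
treatment = click_metrics(impressions=1_000, clicks=150, plays_after_click=60)
print(control)
print(treatment)
```

In this invented example the treatment wins on click-through rate (15% vs 10%) but only 40% of its clicks lead to a play, compared with 60% in the control. Looking at click-through rate alone would have hidden that.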

Hold everything else constant

Coming back to why Netflix assigns users to the two groups (control and treatment) at random: randomization plays an essential role in ensuring the groups are balanced on all dimensions that may influence the test results.

For instance, it ensures that the average length of Netflix subscription isn’t significantly different between the groups, and that content preferences, primary language selection and so on are similarly distributed. The only systematic difference is the product experience being tested, so any difference in the metrics can be attributed to that change with high probability.
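A quick simulation shows why random assignment tends to balance the groups. Everything here is an assumption for the sake of the sketch: the synthetic population, the `tenure_months` and `language` fields, and the `summarize` helper.

```python
import random
from statistics import mean

rng = random.Random(42)  # fixed seed so the sketch is repeatable

# Hypothetical population: subscription length and primary language.
population = [
    {"tenure_months": rng.randint(1, 120),
     "language": rng.choice(["en", "es", "pt"])}
    for _ in range(20_000)
]

# Random assignment: shuffle, then split in half.
rng.shuffle(population)
control, treatment = population[:10_000], population[10_000:]

def summarize(group):
    """Average tenure and share of English-language profiles in a group."""
    return {
        "mean_tenure": mean(s["tenure_months"] for s in group),
        "share_en": sum(s["language"] == "en" for s in group) / len(group),
    }

print(summarize(control))
print(summarize(treatment))
```

With groups this large, the two summaries should come out very close to each other even though nobody matched the groups by hand. That is what lets the product change stand out as the only systematic difference.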

“A/B tests let us make causal statements. We’ve introduced the Upside Down product experience to Group B only, and because we’ve randomly assigned members to groups A and B, everything else is held constant between the two groups. We can therefore conclude with high probability…that the Upside Down product caused the reduction in engagement.”

Netflix Technology Blog

A real-life example from Netflix – it all starts with an idea

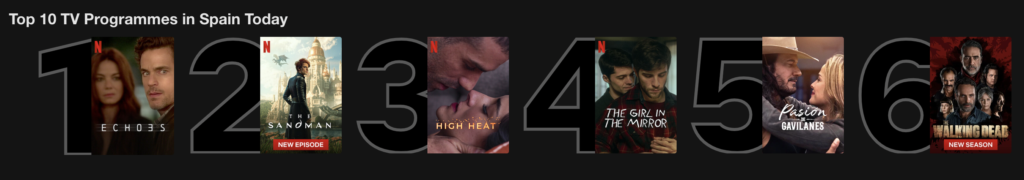

Did you know that the ‘Top 10’ titles section of your Netflix home screen was once just an idea that turned into a testable hypothesis?

The idea: surfacing titles that are popular in each country would positively impact subscribers’ experience in two ways:

- By presenting the most popular titles, Netflix could help members have a shared experience and connect with each other through conversations

- It would support subscribers’ decision-making when choosing what to watch by fulfilling the intrinsic human desire to fit in and be part of a shared conversation

Turning the idea into a testable hypothesis:

Showing members the Top 10 experience will help them find something to watch, increasing member joy and satisfaction.

Choice of a primary decision metric: member engagement with Netflix (made up of a few metrics)

Secondary metrics included title-level viewing of the titles that appeared in the ‘Top 10’ list, the percentage of viewing that originated from the ‘Top 10’ row vs other parts of the page, etc.

What’s more, the team always adds some guardrail metrics to limit any negative consequences that could arise from the test, as well as to spot any product bugs. For instance, they might compare the number of contacts to customer service for the control and treatment groups, checking that the new feature isn’t increasing the contact rate, which could indicate subscriber confusion or dissatisfaction.
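One common way to run a guardrail comparison like this is a two-proportion z-test on the contact rates. The sketch below uses that standard technique as an assumption; the source doesn't say which statistical method Netflix applies, and the counts are invented for illustration.

```python
from math import erf, sqrt

def two_proportion_z_test(events_a, n_a, events_b, n_b):
    """Compare two rates; return the z statistic and two-sided p-value."""
    p_a, p_b = events_a / n_a, events_b / n_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (events_a + events_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF expressed with the error function.
    cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))
    return z, 2 * (1 - cdf)

# Illustrative counts: customer service contacts per 10,000 members.
z, p_value = two_proportion_z_test(
    events_a=120, n_a=10_000,   # control
    events_b=165, n_b=10_000,   # treatment
)
print(f"z = {z:.2f}, p = {p_value:.4f}")
```

With these made-up counts the treatment's contact rate (1.65%) is higher than the control's (1.2%), and a small p-value would suggest the gap is unlikely to be chance alone. In that situation the guardrail would flag the feature for a closer look, even if the primary metric had improved.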