By Elaine Piniat and Judith Langowski

A cornerstone of audience strategy is a/b testing. The media industry, which is frequently inundated with shiny new tools and ways of telling stories, must constantly iterate to keep audiences engaged and informed. It’s easy to get lost in the competition and the tweaks to algorithms, which leads to overthinking and overdeveloping. But a/b testing doesn’t have to be so complicated. If we keep the reader at the center and go back to the basics, we can see meaningful impact.

1. Focus on your goal

Every newsletter should serve a purpose, whether that is to drive revenue (ads or subscriptions), traffic, loyalty/retention, etc. Identify the result(s) that you’re trying to achieve and focus your a/b testing efforts on that.

We launched digital subscriptions to Reuters.com in the fall of 2024. While growing revenue via digital subscriptions is a priority, it’s unrealistic to expect that of every newsletter. Some newsletters are meant to be strong editorial products simply because of their newsworthiness. They’re inbox experiences that serve the readers, but we do expect them to lead to subscriptions and reduce churn. Others do a great job of driving users to the site to eventually hit the paywall and hopefully convert. The formats likely look different, which leaves room to experiment.

Last year, we a/b tested click-centric sections, driven by feeds, to see if we could drive more users to the site from narrative newsletters. This turned out to be extremely effective, increasing click-through rate (CTR) across all newsletters. We also tested moving the click-centric section higher up in the newsletter, and the higher placement performed better in every a/b test.
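
For readers who want to check whether a CTR difference like this is more than noise, here is a minimal Python sketch. It computes the relative uplift between a control and a variant and runs a two-sided two-proportion z-test. The function name, the choice of delivered emails as the CTR denominator, and all counts are assumptions for illustration; they are not Reuters data or tooling.

```python
import math


def ctr_ab_test(clicks_a, delivered_a, clicks_b, delivered_b):
    """Compare the CTR of a control (A) and a variant (B).

    Assumes CTR = clicks / delivered emails (a hypothetical definition).
    Returns (ctr_a, ctr_b, relative_uplift, p_value), where p_value comes
    from a two-sided two-proportion z-test with a pooled standard error.
    """
    ctr_a = clicks_a / delivered_a
    ctr_b = clicks_b / delivered_b
    relative_uplift = (ctr_b - ctr_a) / ctr_a

    # Pooled click rate under the null hypothesis of no difference.
    pooled = (clicks_a + clicks_b) / (delivered_a + delivered_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / delivered_a + 1 / delivered_b))
    z = (ctr_b - ctr_a) / std_err

    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return ctr_a, ctr_b, relative_uplift, p_value


# Hypothetical counts: 400 clicks vs. 480 clicks on 10,000 delivered emails each.
ctr_a, ctr_b, uplift, p_value = ctr_ab_test(400, 10_000, 480, 10_000)
print(f"CTR A={ctr_a:.2%}, CTR B={ctr_b:.2%}, uplift={uplift:.0%}, p={p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be chance alone, but the threshold you accept and the metric you test should follow from the goal you set for the newsletter.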

The goal of those newsletters is still to generate an engaged and loyal audience, but we were able to find additional value in terms of bringing traffic to the site.

2. Sell your idea and build on the results

Some ideas are more involved and require significant changes or additional resources. You need to justify the change and work. That’s where a/b testing comes in. It’s less of a commitment and a good opportunity to show if the change is worth it.

For example, we were looking to introduce new newsletter sign-up modules and tested one successfully at the bottom of articles. We wanted to move the sign-up module to the top of articles, assuming this would increase newsletter subscriptions, but this would impact ad revenue.

We a/b tested it and found that it performed 50% better than the original article sign-up link. The test was successful, and we concluded that the impact on ad revenue would be acceptable and outweighed by the uplift in newsletter subscribers and user engagement. That let us justify permanently moving the sign-up link up.
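
A test like this depends on each reader consistently seeing the same placement. One common way to do that is to hash a user identifier into a bucket, sketched below in Python. This is a generic illustration, not a description of how Reuters splits traffic; the function name, experiment label, and variant names are hypothetical.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("control", "treatment")):
    """Deterministically map a user to a variant for one experiment.

    Hashing the experiment name together with the user ID means the same
    user always gets the same variant within an experiment, while
    different experiments split users independently.
    """
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Hypothetical usage: decide where a reader sees the sign-up module.
variant = assign_variant("reader-12345", "signup-module-position")
print(variant)
```

Because the assignment is a pure function of the inputs, no lookup table is needed, and the split can be reproduced later when analyzing results.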

Since doing so, clicks on the newsletter sign-up module increased by 63%, which was higher than we expected, with total newsletter sign-ups up by 9%. Our next step is to a/b test our sign-up page to drive more conversions. A/b testing is a continuous effort. You make one small change that influences the next.

3. Keep it simple

Ideas don’t have to be extravagant to matter. The smallest change can move the needle, and a series of simple a/b tests can have lasting impact.

Our earliest tests included basic subject line tests. We learned that three things help increase open rates: being witty when appropriate while maintaining Reuters standards, tapping into subscriber demographics, and using SEO keywords.

Another test was removing images from list-centric automated newsletters. This increased CTR by 29-33% in four newsletters. We tested moving an image to the bottom of a narrative newsletter rather than the top, which increased CTR by 15%. We also tested the length of a newsletter that summarizes one story per day and found that shorter is better. These tests were nothing fancy, but each one still delivered an improvement. A/b testing subject lines, in particular, is useful because the learnings carry over to future editions.

4. Learn from your peers

The best ideas come from our peers. It’s important that the media industry works together by sharing best practices and learnings.

Last year, at The Audiencers’ Festival, a peer from the Washington Post shared that they saw an increase in clicks after removing descriptions from list-centric newsletters. Former colleagues at Newsday confirmed the same effect. We a/b tested this in three of our automated newsletters at Reuters, and CTR increased by an average of 59%. There’s no shame in learning from industry best practices.

5. Listen to your readers

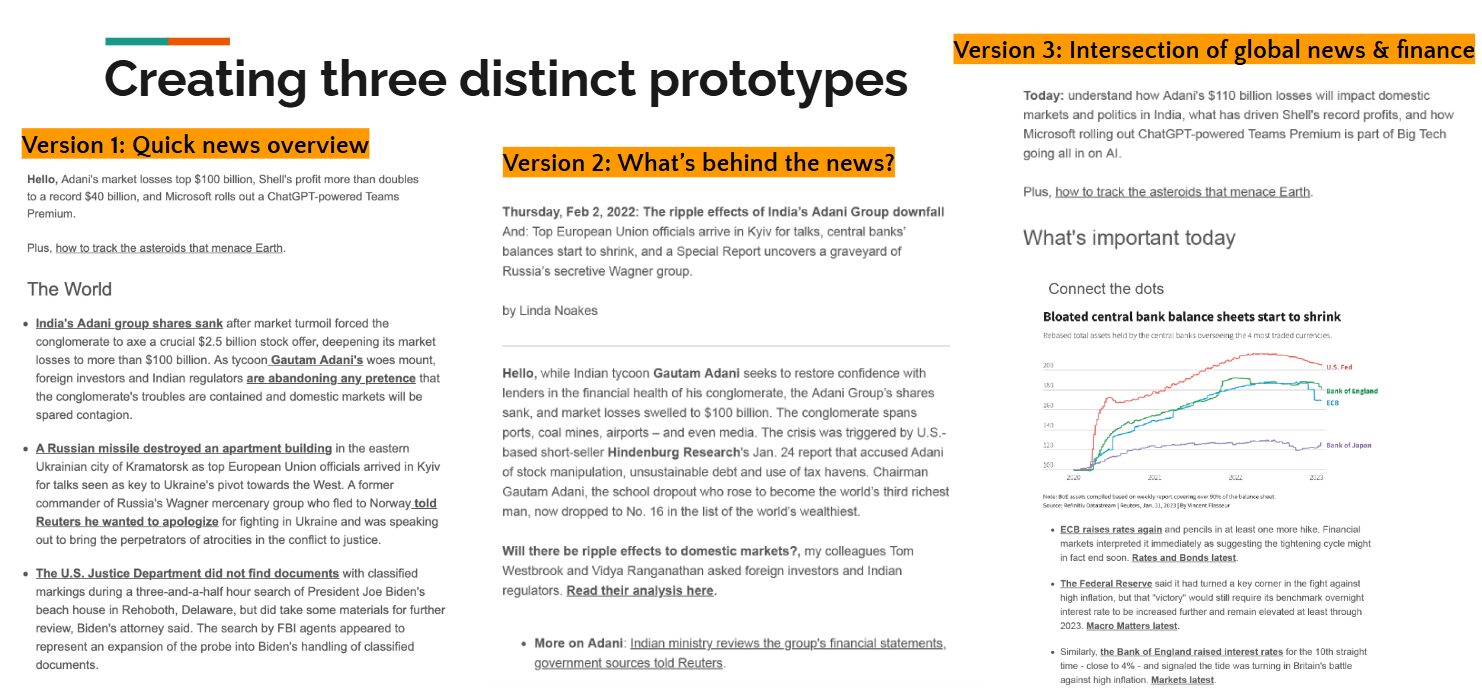

For the relaunch of our flagship newsletter, the Daily Briefing, we followed an iterative process that involved building prototypes, surveying the audience, and regularly reflecting on whether the changes were successful. Now, two years after the relaunch, it remains one of our most successful newsletters, with consistently high open and click rates. You can review our process in this presentation from ONA 2023.

Our objective was to give the newsletter a clearer design and bring our audience a digestible news overview. We drafted three prototypes of varying lengths and with different sections. Our UX team designed a survey, and more than 300 readers took part and gave their feedback. The results were clear: a short and concise news overview won over prototypes that had more background information or deep dives into one specific news story. This prototyping experience influenced the development of other newsletters in the Reuters portfolio.

As our strategy continues to evolve, especially with a subscription model in mind, so will our a/b testing, but we have a solid foundation to build from. The point is to learn from the data and to remain curious.