Listening to articles rather than reading them because we're driving, our hands are busy cooking, or we're in the middle of a workout: the idea seduced the industry when smart speakers and podcasts took off, but the reality, let's be frank, was disappointing. Metallic voices, mispronunciations, monotone delivery: our ears suffered. But that was 2017. Dazzling advances in text-to-speech technology have since made the experience acceptable and even enjoyable.

In early March 2024, the French daily Le Monde launched an audio version of all articles in its mobile app, a feature reserved for subscribers only. I caught up with Lomig Coulon, Lead Product Manager, and Julie Lelièvre, Deputy Audience Manager, who told The Audiencers about the birth of the project, its development and initial results.

Hello Lomig, Julie. First of all, could you tell us when and how this audio version project appeared in your roadmaps?

Julie and Lomig: Hello, The Audiencers!

Over the last few years, we've of course seen audio grow in France through podcasts, and in the US and UK, some media outlets have been using actors to read their articles. We kept an eye on this trend without actively working on the subject.

It was in 2022, when we began redesigning our La Matinale mobile app, that the project took shape. The app's mission is to save readers time thanks to a smaller selection of news items. Audio meets this same time-saving need.

What's more, in our cancellation surveys, many subscribers told us that they loved Le Monde but didn't have time to read it, and felt they weren't getting the most out of their subscription.

Our position was: “If they don't have time to read us, maybe they'll have time to listen to us!”

Let's talk goals: what is the purpose of developing this feature?

We've identified two:

- Firstly, retention: encouraging subscribers to use and consume our content

- Secondly, acquisition: promoting this subscriber-only service

In 2022, the scope of the project was limited to the La Matinale app, right?

Yes, that's right. La Matinale is a self-contained format, with engaged, loyal users and an established product. And because it offers a curated selection of 20 articles, readers can easily pick the ones that interest them and build their own “playlist” to listen to on their morning commute.

La Matinale also has a smaller audience than our main Le Monde app, which makes it more fertile ground for experimentation and innovation.

Although we took an iterative approach and initially restricted this feature to La Matinale, we designed the player and the article-to-audio conversion mechanism from the outset to be reusable across all our articles and in the main Le Monde app.

When you started out, did you make a distinction between free and paid articles, or between subscribed readers and those who aren't?

We gave this a lot of thought, weighing two goals: reinforcing the value of our subscription by reserving the feature for paying readers, and bringing this new way of getting information to as many people as possible.

So when we launched the feature in La Matinale, audio was available to everyone, on both free and paid articles. That way, non-subscribers could listen to the few free articles featured in each day's edition.

Later, when we deployed the service on the main Le Monde app, we reserved it for subscribers only, and brought La Matinale in line with the same principle.

How did you choose the text-to-speech tech solution?

We quickly ruled out having articles read by humans. We publish over 100 articles a day and wanted each audio version to be available as soon as the article was published, so we simply didn't have time to have them read by actors, record the voices, and so on. In this context, synthesized voices were the obvious choice.

We studied various solutions: Murf, Odia, Remixd, Voxygen, Google, ETX Studio, ReadSpeaker and Microsoft Azure Cognitive Services.

In the end, we opted for the Microsoft solution, based on the primary criterion of voice quality. At the time, they had the most convincing French voice.

We're continuing to keep a close eye on the progress of speech synthesis technologies, both from Microsoft and other players, because things are moving very fast in this field of generative AI.

Beyond the voice itself, there's the question of playback. Which audio player did you choose?

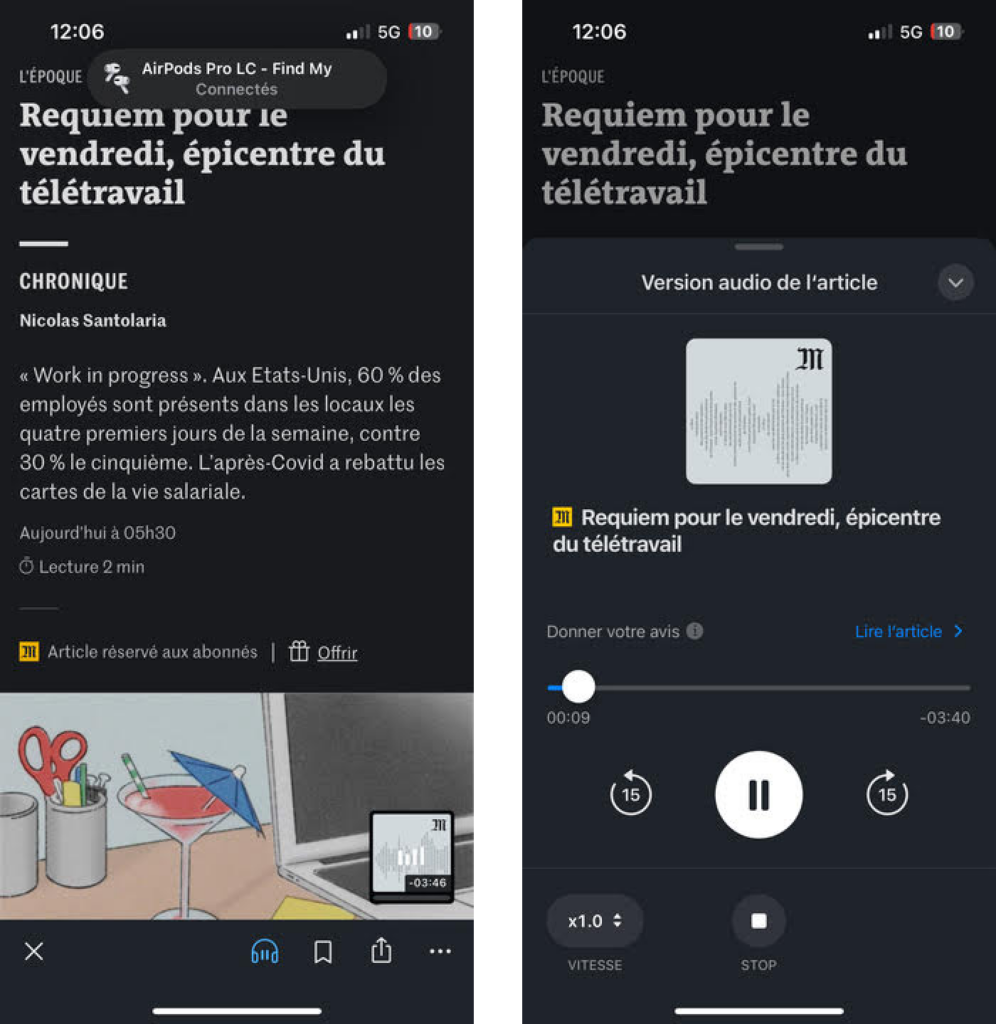

This project enabled us to develop our own native player for our mobile app. Previously, we had been using an “embed” web player, which was disappointing on several levels: no playback continuity outside navigation, few functionalities linked to reading comfort…

Today, our readers can reduce or enlarge the audio player, retrieve the link to the article being read, vary the playback speed and, above all, continue listening while browsing or when the phone is locked.

The new audio player, in a mini (left) and large (right) format.

“We were surprised by how much readers use the playback speed setting! Much more than we thought…”

In concrete terms, how do you go about converting written articles into audio?

For the long term, we did two things:

- We created our own custom synthetic voices, recorded with actors. We now have 4 custom voices for “Le Monde”. We chose to alternate male and female voices across paragraphs to hold listeners' attention

- Our official composer, Amandine Robillard, created various sound elements that add a musical layer to our text-to-speech; we use them to introduce our audio articles
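
Introducing each audio article with the composer's music essentially comes down to a mixing step. Here is a minimal Python sketch, assuming raw 16-bit mono PCM samples at a single sample rate; the function name, the `music_gain` value and the sample format are illustrative assumptions, not Le Monde's actual tooling. The jingle is attenuated and laid under the spoken intro, then the synthesized article is appended.

```python
from array import array

INT16_MIN, INT16_MAX = -32768, 32767


def add_intro(jingle: array, intro_voice: array, article: array,
              music_gain: float = 0.3) -> array:
    """Mix an attenuated jingle under the spoken intro, then append the article.

    All inputs are 16-bit mono PCM samples (array typecode "h") sharing one
    sample rate; that format is an assumption of this sketch.
    """
    head = array("h")
    for i in range(max(len(jingle), len(intro_voice))):
        voice = intro_voice[i] if i < len(intro_voice) else 0
        music = jingle[i] if i < len(jingle) else 0
        mixed = round(voice + music_gain * music)
        # Clip to the int16 range so loud peaks saturate instead of overflowing.
        head.append(max(INT16_MIN, min(INT16_MAX, mixed)))
    return head + article
```

Reading and writing actual audio files (for instance with Python's standard `wave` module) is left out; a production pipeline would more likely rely on a dedicated audio library.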

And on a daily basis:

- Le Monde sends Microsoft the text along with a number of settings. For example, we specify which portions should be read aloud and which should not (such as subheadings or text in square brackets), the number of voices to use, etc.

- Microsoft sends us back an audio file with our rules applied

- Our in-house tool adds an introduction, “You are listening to an article from Le Monde”, and the soundtrack

- The file then arrives in Sirius, our in-house CMS, and is published as is
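
To make the first step concrete, here is a minimal Python sketch of how such settings could be expressed in SSML, the W3C markup that Azure's speech service accepts. It assumes, for illustration only, that subheadings and bracketed text are both skipped and that voices alternate paragraph by paragraph; the voice names `LeMondeVoiceA` and `LeMondeVoiceB` are hypothetical placeholders for the custom voices.

```python
import re
from xml.sax.saxutils import escape

# Hypothetical placeholders for the custom "Le Monde" voices.
VOICES = ["LeMondeVoiceA", "LeMondeVoiceB"]

# Anything between square brackets is treated as "not to be read" (assumption).
BRACKETED = re.compile(r"\[[^\]]*\]")


def build_ssml(blocks, voices=VOICES, lang="fr-FR"):
    """Build one SSML document from (kind, text) blocks.

    kind is "p" for a paragraph or "subheading"; subheadings are skipped
    (an assumption for this sketch). Voices rotate paragraph by paragraph.
    """
    parts = []
    spoken = 0
    for kind, text in blocks:
        if kind == "subheading":
            continue
        # Drop bracketed text, then collapse the leftover whitespace.
        cleaned = " ".join(BRACKETED.sub("", text).split())
        if not cleaned:
            continue
        voice = voices[spoken % len(voices)]
        parts.append(f'<voice name="{voice}"><p>{escape(cleaned)}</p></voice>')
        spoken += 1
    return (
        '<speak version="1.0" xmlns="http://www.w3.org/2001/10/synthesis" '
        f'xml:lang="{lang}">' + "".join(parts) + "</speak>"
    )


ssml = build_ssml([
    ("p", "Le gouvernement a présenté son projet. [Mis à jour à 10 h]"),
    ("subheading", "Une réforme contestée"),
    ("p", "Les syndicats ont réagi dans la soirée."),
])
print(ssml)
```

The resulting string could then be handed to the Azure Speech SDK (for example `SpeechSynthesizer.speak_ssml_async` in the Python package), which is not shown here; the exact rules Le Monde sends are not public.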

At first, of course, we listened to each file we received, one by one, to correct the pronunciation of proper nouns, Roman numerals, hyphenated words, etc. Today, this is no longer the case: the audio version goes straight into production. We only make corrections when readers point out errors.

Which pronunciation issues were the most difficult to deal with?

We created our own pronunciation dictionary. Some examples of special cases:

- The final “t” in “jet d'eau” (silent) vs. “jets privés” (pronounced)

- “Jean”, the first name, vs. “jean”, the trousers

- “Dix” and “six” (ten and six), whose final “s” sound is pronounced or not depending on context

A typical case of mishandling is the capital letter X, Twitter's new name, which the voice reads as 10 (the Roman numeral). We knew there would be a few errors of this type: we accept a margin of imperfection!
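
A pronunciation dictionary of this kind can be sketched as a lookup of written forms mapped to spoken aliases, wrapped in SSML `<sub alias="…">` tags. In this minimal Python sketch, the two entries and their aliases are hypothetical; matching is case-sensitive, so “jean” (the trousers) is rewritten while “Jean” (the first name) is left alone.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Hypothetical entries: written form -> how the voice should say it.
LEXICON = {
    "jean": "djine",  # the trousers, not the first name "Jean"
    "X": "iks",       # the social network, not the Roman numeral 10
}


def apply_lexicon(text: str, lexicon: dict = LEXICON) -> str:
    """Escape text for XML and wrap dictionary matches in SSML <sub> tags."""
    # Longest entries first, so multi-word phrases win over single words.
    keys = sorted(lexicon, key=len, reverse=True)
    pattern = re.compile(r"\b(" + "|".join(re.escape(k) for k in keys) + r")\b")
    out, pos = [], 0
    for match in pattern.finditer(text):
        out.append(escape(text[pos:match.start()]))
        word = match.group(0)
        out.append(f"<sub alias={quoteattr(lexicon[word])}>{escape(word)}</sub>")
        pos = match.end()
    out.append(escape(text[pos:]))
    return "".join(out)


print(apply_lexicon("Jean poste sur X."))
```

In a pipeline, this step would run on each paragraph in place of plain XML escaping. A plain dictionary has limits: an entry for “X” would also catch “Louis X”, and context-dependent cases such as “dix” and “six” need rules about the following word, which fits the margin of imperfection the team describes.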

The French voices still have room for improvement, but they're already pretty good. A few readers have even been fooled into thinking they were listening to human voices!

However, it should be pointed out that this was not our intention, and our apps clearly state that the voice is a synthesized one.

What were your initial results?

On La Matinale, reader engagement increased significantly, but as we observed in the post-redesign phase, it's hard to know whether it's the feature that generates engagement, or engagement that generates use of the feature.

In spring 2023, you decided to launch this service in the main Le Monde app, but for a very small percentage of the audience…

Yes, at that point we embarked on a large-scale A/B test lasting a year, whose deployment and results we monitored via the Amplitude product analytics platform.

In concrete terms, we took 25% of the main app's audience, and divided it into 8 cohorts:

- 4 control cohorts with no access to audio

- 4 test cohorts with access to audio

All 8 cohorts are built the same way, so that the control and test groups are comparable. To confirm that the test was valid, we waited until the 4 test cohorts showed the same variations.
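
One common way to build stable cohorts like these is deterministic hash bucketing, sketched below in Python. This is an assumption, not a description of how Amplitude or Le Monde actually assigned users: each user ID is hashed into one of 32 buckets, the first 8 buckets (25% of the audience) become the 8 cohorts, and cohorts 4 to 7 get audio. The `cohorts_agree` helper is a hypothetical check in the spirit of waiting for the test cohorts to move together.

```python
import hashlib
from typing import List, Optional, Tuple

N_BUCKETS = 32   # 8 of 32 buckets = 25% of the audience
N_COHORTS = 8    # cohorts 0-3: control (no audio), 4-7: test (audio)


def assign_cohort(user_id: str, salt: str = "audio-ab-test") -> Optional[Tuple[int, bool]]:
    """Return (cohort, has_audio), or None if the user is outside the experiment.

    Hashing the salted ID keeps the assignment stable for a given user ID
    without storing anything.
    """
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % N_BUCKETS
    if bucket >= N_COHORTS:
        return None
    return bucket, bucket >= N_COHORTS // 2


def cohorts_agree(daily_rates: List[List[float]], tolerance: float = 0.1) -> bool:
    """Check that cohorts move together: each day, every cohort's rate stays
    within `tolerance` (relative) of that day's mean across cohorts.

    daily_rates holds one list of daily values per cohort.
    """
    for day in zip(*daily_rates):
        mean = sum(day) / len(day)
        if any(abs(rate - mean) > tolerance * mean for rate in day):
            return False
    return True
```

Using equal-sized buckets keeps the 8 cohorts the same size by construction, which makes their daily metrics directly comparable.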

From a functional point of view, where is the feature presented to users?

The feature is displayed on the article page, in the bottom bar, whereas in La Matinale, readers access it from the news selection, one screen earlier.

But we realized that in the main app, the feature was struggling to catch on. It probably lacked exposure: once users had clicked on an article title, they were there to read rather than listen.

We did some user testing and found that our readers hadn't even noticed the audio option! We needed to give this feature more exposure.

Of course, this posed a challenge: since we were in the middle of an A/B test, we couldn't communicate widely about the feature, only send out a few in-app pushes.

So how do you go about showing it to more users?

We sent another in-app push to all beta testers, with an A/B/C/D test on the wording:

The 4 variations of our Batch push notifications

This gave usage a good boost, and it held steady over time. Clearly, the success of this feature also depends heavily on its exposure.

So we decided to display the audio playback button directly on the feed pages (home page, section pages…), and usage exploded. That proved there was real appetite for the feature; we just had to put it in front of readers!

In March 2024, you launched the feature on 100% of the audience on your main app…

Yes, indeed. Here's what our subscribers see – on the feed pages (left), on the article page (right):

And below, two screenshots of the subscription prompt shown when someone starts listening, from a feed page (left) and from an article page (right):

What are the first usage statistics?

Among subscribers who use the application, 30% have used the feature at least once a month since its launch.

We find that readers who use the feature are 1.7 times more engaged than other readers (in terms of number of articles read).
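
Both figures are simple ratios. The following Python sketch shows one way to compute them from per-user data, assuming you already know which subscribers were active in a given month, which of them played audio, and how many articles each read; the function names and inputs are hypothetical.

```python
from typing import Dict, Set


def monthly_adoption(active_subscribers: Set[str], listeners: Set[str]) -> float:
    """Share of a month's active subscribers who played at least one audio article."""
    if not active_subscribers:
        return 0.0
    return len(active_subscribers & listeners) / len(active_subscribers)


def engagement_ratio(articles_read: Dict[str, int], listeners: Set[str]) -> float:
    """Mean articles read by listeners divided by the mean for everyone else."""
    listened = [n for user, n in articles_read.items() if user in listeners]
    others = [n for user, n in articles_read.items() if user not in listeners]
    if not listened or not others:
        raise ValueError("need both listeners and non-listeners")
    others_mean = sum(others) / len(others)
    if others_mean == 0:
        raise ValueError("non-listeners read no articles; ratio undefined")
    return (sum(listened) / len(listened)) / others_mean
```

As the team notes about La Matinale, such a ratio only shows correlation: it cannot tell whether listening drives reading or whether engaged readers are simply more likely to listen.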

Have you already observed any effects on subscriber retention?

For the moment, no, not really. Those who listen don't necessarily remain subscribers longer. On the other hand, we have noted an effect on engagement: those who listen read more articles!

Does reading more articles mean a stronger commitment to the subscription? It's hard to say at this stage.

How do you study the usage of your readers/listeners?

We obviously have a whole tagging plan for quantitative metrics (where the feature is used, how much of each audio is completed…).

On the qualitative side, we've set up a feedback form in the app, and another in the player.

We're looking for two types of feedback:

- Is there a problem with the audio quality: mispronunciation, an overly robotic voice…?

- And more general feedback: is it easy to use, what do you think of the sound design…?
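
On the quantitative side, a metric such as “how much of the audio is completed” can be derived from playback events. This Python sketch assumes a hypothetical event carrying the seconds played and the audio duration; the field names and the 25/50/75/100% milestones are illustrative, not Le Monde's tagging plan. It computes completion rates per placement (feed vs. article page).

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List

MILESTONES = (0.25, 0.5, 0.75, 1.0)


@dataclass
class ListenEvent:
    """Hypothetical analytics event sent when a listening session ends."""
    user_id: str
    article_id: str
    placement: str      # where playback started, e.g. "feed" or "article_page"
    listened_s: float   # seconds actually played
    duration_s: float   # total length of the audio file


def completion_ratio(event: ListenEvent) -> float:
    """Fraction of the audio played, capped at 1.0."""
    if event.duration_s <= 0:
        return 0.0
    return min(event.listened_s / event.duration_s, 1.0)


def completion_milestones(event: ListenEvent) -> List[float]:
    """Milestones reached in this session."""
    ratio = completion_ratio(event)
    return [m for m in MILESTONES if ratio >= m]


def completion_by_placement(events: Iterable[ListenEvent],
                            milestone: float = 0.75) -> Dict[str, float]:
    """Share of sessions, per placement, that reached `milestone` completion."""
    sessions: Counter = Counter()
    reached: Counter = Counter()
    for event in events:
        sessions[event.placement] += 1
        if completion_ratio(event) >= milestone:
            reached[event.placement] += 1
    return {p: reached[p] / n for p, n in sessions.items()}
```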

What did you learn?

First, a figure: 80% of users say they are “satisfied” or “very satisfied” with the functionality.

Then, of course, most of the feedback is very positive…

“It's really interesting to be able to listen to long articles, it's a great idea!”

“I listen to a lot of podcasts and I greatly appreciate being able to listen to my articles from Le Monde”

“Bravo! I've rarely seen such good integration of a synthetic voice!”

Usage

“Great initiative that allows me to listen whilst walking!”

“Being able to listen to articles in the shower or in the car”

Accessibility

“Hello. Bravo for this feature, it's great. I'm blind and I love using it while doing my sport.”

“Thank you on behalf of dyslexics, who can finally listen to you.”

Subscription

“I think it's great and it's this feature that convinced me to subscribe!”

On the other hand, we don't have much information about where people listen. Are we listened to more in the car or while doing the dishes?

Are you looking to prove the return on investment of this development?

This audio version of articles is above all a question of innovation and experimentation. It's about developing a new usage over the medium/long term, so we're not expecting a return on investment in year 1.

It should be noted that this project was carried out with support from the Google News Initiative, which financed a large part of the initial development. For the moment, we have no plans for further development, but we do have operating costs (the Azure license and audio generation with our Le Monde voices).

On the revenue side, as this is a subscriber-only feature, we can of course calculate the conversion rate on the paywall and track how much the feature contributes to subscriber acquisition and retention. Finally, it is technically possible to monetize this audio through advertising, but we don't do so yet. Our advertising sales house is considering developing this possibility in the future.
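
The paywall conversion rate can be sketched as a simple funnel: of the users shown the audio subscription prompt, how many subscribed shortly afterwards. In this Python sketch, the 24-hour attribution window and the input dictionaries are assumptions for illustration, not Le Monde's actual attribution method.

```python
from datetime import datetime, timedelta
from typing import Dict


def audio_paywall_conversion(
    prompts_shown: Dict[str, datetime],
    subscribed_at: Dict[str, datetime],
    window: timedelta = timedelta(hours=24),
) -> float:
    """Share of users shown the audio subscription prompt who subscribed
    within `window` of first seeing it (a simple time-window attribution)."""
    if not prompts_shown:
        return 0.0
    converted = sum(
        1
        for user, shown in prompts_shown.items()
        if user in subscribed_at and shown <= subscribed_at[user] <= shown + window
    )
    return converted / len(prompts_shown)
```

A longer window credits the audio prompt with more subscriptions but also with more that other touchpoints may have driven; the right value is a business choice.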